Unlocking Efficiency and Scale: The Mixture of Experts (MoE) and Sparse MoE (SMoE) Architectures in Mistral 8x7B

Think of a hospital with a team of specialist doctors — each doctor is an expert in a different area, such as cardiology, neurology, or orthopedics. When a patient comes in with a health issue, they are referred to the specialist who is best suited to diagnose and treat that specific problem. Only the relevant doctors are consulted, making the diagnosis and treatment process faster and more accurate.

MoE Analogy: The MoE model functions like this hospital, where each “doctor” is an expert in processing a particular type of input data. The “patient’s problem” is routed to the appropriate experts, optimizing the model’s performance and resource use.

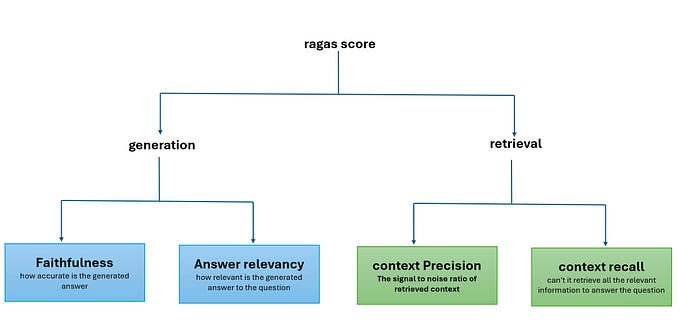

Mixture of Experts (MoE) Architecture:

Mixture of Experts (MoE) is a neural network architecture that dynamically selects and activates only a subset of its components (called “experts”) for processing each input. This approach aims to increase the model’s capacity without a corresponding increase in computational costs. MoE architecture involves multiple “experts,” each of which is a separate neural network (or a part of one). The model uses a gating mechanism to decide which experts should be used for each specific input.
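The description above can be written compactly. The notation below is an assumption made for illustration (it is not taken from a specific paper): $E_1, \dots, E_n$ are the experts, $h(x) = x W_g$ are the gate's scores for input $x$, and $\mathcal{T}(x)$ is the set of the $k$ highest-scoring experts.

```latex
y = \sum_{i \in \mathcal{T}(x)} g_i(x)\, E_i(x),
\qquad
g_i(x) = \frac{\exp\big(h_i(x)\big)}{\sum_{j \in \mathcal{T}(x)} \exp\big(h_j(x)\big)},
\qquad
\mathcal{T}(x) = \operatorname{TopK}\big(h(x),\, k\big)
```

Experts outside $\mathcal{T}(x)$ contribute nothing to $y$, so they do not need to be evaluated at all. That is where the compute saving comes from.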

Key Concepts:

Experts: These are individual neural networks or sub-networks within the MoE. During training, each expert can come to specialize in certain kinds of input; the specialization is learned, not assigned by hand.

Gating Network: A small neural network that determines which experts are activated for a given input. It outputs a probability distribution over the experts, and typically, only the top-k experts are selected.

Dynamic Routing: Instead of using all experts for every input, MoE dynamically routes each input to a subset of experts, reducing computational costs while maintaining high model capacity.

Scalability: MoE architectures can scale to very large sizes (with many experts), as only a few experts are activated at a time, leading to efficient use of resources.
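The gating step can be sketched in a few lines of plain Python. This is a minimal illustration, not the code of any particular model: the function names `softmax` and `top_k_gate` and the example scores are hypothetical, and renormalizing the selected weights so they sum to 1 is one common choice among several.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_gate(logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are the softmax probabilities of the selected experts,
    renormalized so that they sum to 1.
    """
    probs = softmax(logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]

# Hypothetical gate scores for one input over 4 experts.
router_logits = [0.1, 2.0, -1.0, 1.5]
selection = top_k_gate(router_logits, k=2)
print(selection)  # experts 1 and 3 are selected; their weights sum to 1
```

Only the experts returned by `top_k_gate` would be run for this input; the other two are skipped entirely.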

Sparse Mixture of Experts (Sparse MoE)

Sparse Mixture of Experts (Sparse MoE) is the form of MoE in which the gate gives nonzero weight to only a few experts per input. In a dense (soft) mixture, every expert runs on every input and the gate merely weights their outputs. In Sparse MoE, only the top-k experts run and the rest are skipped, hence the term “sparse.”

Characteristics:

Sparse Activation: Only a few experts are chosen to process each input, which leads to reduced computational overhead. This sparsity is controlled by the gating network, which ensures that only the most relevant experts are activated.

Efficiency: By activating only a small subset of experts, Sparse MoE models can handle large-scale tasks efficiently without the need for proportional increases in computational resources.

Scalability with Efficiency: Sparse MoE models can scale to extremely large sizes (with potentially billions of parameters) while maintaining efficiency, making them suitable for tasks requiring high model capacity but limited computational resources.
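To make sparse activation concrete, here is a toy forward pass through one sparse MoE layer. It is a sketch under simplifying assumptions: the "experts" just scale their input (real experts are feed-forward networks), the router is a plain dot product, and every name (`make_expert`, `sparse_moe_forward`, the weights) is hypothetical. The `calls` list records which experts actually ran.

```python
import math

def make_expert(scale, calls, name):
    """Toy expert that scales every feature and logs that it was called."""
    def expert(x):
        calls.append(name)
        return [scale * v for v in x]
    return expert

def sparse_moe_forward(x, experts, router_weights, k=2):
    """Run x through only the top-k experts and mix their outputs."""
    # Router: one linear score per expert (dot product with x).
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected scores only.
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    z = sum(exps.values())
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)  # unselected experts are never evaluated
        gate = exps[i] / z
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

calls = []
experts = [make_expert(s, calls, f"e{i}") for i, s in enumerate((1.0, 2.0, 3.0, 4.0))]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, top = sparse_moe_forward([2.0, 1.0], experts, router_weights, k=2)
print("selected experts:", top)
print("experts that ran:", calls)
print("output:", out)
```

With four experts and k=2, half of the expert computation is skipped for this input, while all four experts remain available for other inputs.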

Mistral 8x7B Model

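Mistral 8x7B is an implementation of the Sparse MoE architecture developed by Mistral AI, which released it under the name Mixtral 8x7B. The “8x7B” in the name refers to the architecture’s structure: each MoE layer contains 8 experts, and the design builds on the 7-billion-parameter Mistral 7B model. The name does not mean 8 × 7 = 56 billion parameters. Only the feed-forward blocks are replicated per expert, while attention and embedding weights are shared, so the model has about 47 billion parameters in total, of which about 13 billion are used for any one token.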

Key Features:

Sparse Mixture of Experts: The Mistral 8x7B model utilizes the Sparse MoE architecture to balance computational efficiency with high model capacity, enabling it to handle large-scale language modeling tasks effectively.

Expert Layers: In each transformer block, the feed-forward sub-layer is replaced by 8 expert feed-forward networks. For every token, at every layer, the gating mechanism selects 2 of the 8 experts and combines their outputs.

Scalability: Despite having about 47 billion parameters in total, the model uses only about 13 billion of them per token, so its per-token compute is comparable to that of a dense model of roughly that smaller size.

High Performance: Mistral 8x7B is designed to deliver high performance across a wide range of language tasks, leveraging its large parameter count and efficient sparse architecture.
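The model's parameter counts can be reproduced with some arithmetic. The sketch below assumes the dimensions listed in the published Mixtral-8x7B-v0.1 configuration (hidden size 4096, 32 layers, 8 experts, 2 experts per token, and so on). Treat these values as assumptions and check them against the configuration file you are actually using.

```python
# Assumed values, taken from the published Mixtral-8x7B-v0.1 configuration.
HIDDEN = 4096    # model (embedding) dimension
FFN = 14336      # inner dimension of each expert's feed-forward network
LAYERS = 32      # transformer blocks
HEADS = 32       # query heads
KV_HEADS = 8     # key/value heads (grouped-query attention)
VOCAB = 32000    # vocabulary size
EXPERTS = 8      # experts per MoE layer
TOP_K = 2        # experts used per token

head_dim = HIDDEN // HEADS
# Attention: query and output projections are HIDDEN x HIDDEN;
# key and value projections are smaller because of grouped-query attention.
attn = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_HEADS * head_dim
expert = 3 * HIDDEN * FFN   # gated feed-forward network: three weight matrices
router = HIDDEN * EXPERTS   # one linear gate per layer
norms = 2 * HIDDEN          # two normalization layers per block

def count(experts_used):
    """Parameters touched when `experts_used` experts run in every layer."""
    per_layer = attn + router + norms + experts_used * expert
    embeddings = 2 * VOCAB * HIDDEN  # input embedding + output head
    return LAYERS * per_layer + embeddings + HIDDEN  # + final norm

total = count(EXPERTS)
active = count(TOP_K)
print(f"total:  {total / 1e9:.1f}B")             # total:  46.7B
print(f"active: {active / 1e9:.1f}B per token")  # active: 12.9B per token
```

The expert feed-forward weights account for nearly all of the gap between the two figures. Attention, embeddings and the router are shared and are counted once either way.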

How Does Mistral 8x7B Differ from GPT Models?

The Mistral 8x7B model and GPT models, such as GPT-3 and GPT-4, are both advanced neural networks designed for natural language processing tasks. However, they differ significantly in architecture, scalability, efficiency, and approach. Here’s a breakdown of how Mistral 8x7B differs from GPT models:

1. Architecture: Sparse Mixture of Experts (MoE) vs. Dense Transformers

Mistral 8x7B: Utilizes the Sparse Mixture of Experts (Sparse MoE) architecture, where only a subset of the model’s experts (specialized neural networks) are activated for each input. This approach allows the model to maintain high capacity while using computational resources efficiently.

GPT Models: GPT-3 uses a dense transformer architecture, where all model parameters are involved in processing every input. (OpenAI has not published GPT-4’s architecture, so the comparison here is with dense models of the GPT-3 kind.) In a dense model, computational cost grows in step with parameter count, but the entire model is used for each task, which can enhance performance in certain scenarios.

2. Scalability and Efficiency

Mistral 8x7B: The sparse nature of the MoE architecture enables the model to scale to a very large number of parameters without a corresponding increase in computational requirements. Only a small subset of experts is used for each task, making the model more computationally efficient and scalable to larger sizes.

GPT Models: Scaling GPT models typically involves adding more layers and parameters, leading to increased computational costs. While this scaling can improve performance, it requires significant computational resources, especially for training and inference in larger models like GPT-3 or GPT-4.
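The scaling difference can be illustrated with a small parameter count for a single MoE layer. This is a sketch with hypothetical layer sizes: it assumes each expert is a plain two-matrix feed-forward network and ignores biases. In a dense layer of the same stored size, the "used per token" figure would equal the "stored" figure.

```python
def moe_ffn_params(d_model, d_ff, n_experts, top_k):
    """Return (stored, used_per_token) parameter counts for one MoE layer.

    Assumes each expert is a plain two-matrix feed-forward network and the
    router is a single linear layer; biases are ignored.
    """
    per_expert = 2 * d_model * d_ff
    router = d_model * n_experts
    stored = n_experts * per_expert + router
    used = top_k * per_expert + router
    return stored, used

# Hypothetical layer sizes, chosen only for illustration.
D_MODEL, D_FF, TOP_K = 1024, 4096, 2

results = {}
for n_experts in (2, 8, 32, 128):
    results[n_experts] = moe_ffn_params(D_MODEL, D_FF, n_experts, TOP_K)
    stored, used = results[n_experts]
    print(f"{n_experts:>3} experts: stored {stored / 1e6:8.1f}M, "
          f"used per token {used / 1e6:6.1f}M")
```

Going from 2 to 128 experts multiplies the stored parameters by about 64, while the parameters used per token grow only by the slightly larger router.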

3. Dynamic Routing vs. Uniform Processing

Mistral 8x7B: Employs a dynamic routing mechanism via a gating network, which decides which experts to activate based on the input. This means the model can tailor its processing resources to the specific needs of the task, leading to more efficient use of its parameters.

GPT Models: Process every input uniformly through the entire network, regardless of the specific characteristics of the input. While this can provide consistent performance across different tasks, it is less efficient in terms of resource utilization.

4. Model Capacity vs. Computational Cost

Mistral 8x7B: Achieves high model capacity through the MoE architecture while keeping computational costs low. By activating only relevant experts, the model can handle complex tasks without the need for excessive computational power, making it more suitable for large-scale applications with resource constraints.

GPT Models: High model capacity in GPT models is directly linked to increased computational costs. As these models grow in size, they require more computational resources, which can be a limiting factor in practical deployment, especially for large models like GPT-3.

5. Specialization of Experts

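Mistral 8x7B: Each expert within the model can specialize in certain kinds of input, allowing for more precise and efficient processing. This specialization is learned during training, and routing happens per token and per layer, not per task. Mistral AI’s own routing analysis reported no obvious division of experts by topic; the patterns it found followed syntax more than subject matter. The hospital picture of one specialist per domain is therefore a simplification.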

GPT Models: Do not have specialized components; instead, they use a uniform approach where every part of the model is involved in processing all types of data. This can lead to strong generalization across a wide range of tasks but might not be as efficient as the expert-based approach of Mistral 8x7B.

6. Training and Inference Efficiency

Mistral 8x7B: The sparse activation mechanism allows for more efficient training and inference, as only a portion of the model’s parameters is used for each token. This reduces the compute per token and makes the model more suitable for real-time or large-scale applications. The saving is in computation, not memory: all experts must still be loaded, because the router may select any of them.

GPT Models: Training and inference in GPT models are more resource-intensive because the entire model is used for each input. This can slow down processing times and increase the cost of deployment, particularly for larger models.
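Sparse activation lowers the arithmetic per token, but every expert's weights still have to be available, since the router may pick any of them. The back-of-the-envelope sketch below shows the split. It assumes about 46.7 billion stored and 12.9 billion active parameters, uses the common rule of thumb of roughly 2 floating-point operations per active parameter per token, and counts weights only (no activations or cache). All figures are approximate.

```python
TOTAL_PARAMS = 46.7e9   # assumed: parameters that must be stored
ACTIVE_PARAMS = 12.9e9  # assumed: parameters used for one token

def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return n_params * bytes_per_param / 1e9

def forward_flops_per_token(n_active_params):
    """Rule-of-thumb forward cost: about 2 FLOPs per active parameter."""
    return 2 * n_active_params

# Memory depends on ALL stored parameters, whatever the routing does.
for name, nbytes in (("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)):
    print(f"{name:>6} weights: {weight_memory_gb(TOTAL_PARAMS, nbytes):6.1f} GB")

# Compute depends only on the ACTIVE parameters.
sparse = forward_flops_per_token(ACTIVE_PARAMS)
dense_same_size = forward_flops_per_token(TOTAL_PARAMS)
print(f"sparse forward pass:  {sparse / 1e9:.1f} GFLOPs per token")
print(f"dense, same storage:  {dense_same_size / 1e9:.1f} GFLOPs per token")
```

Under these assumptions the sparse model needs the memory of a 47-billion-parameter model but roughly the per-token compute of a 13-billion-parameter one.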

7. Use Cases and Applications

Mistral 8x7B: Due to its scalable and efficient architecture, Mistral 8x7B is particularly well-suited for tasks that require handling large-scale data with limited computational resources. It is ideal for scenarios where efficiency and scalability are critical, such as in industrial applications or research environments.

GPT Models: GPT models are versatile and can be applied to a broad range of NLP tasks, from text generation to translation and question answering. They are particularly effective in scenarios where high-quality, general-purpose language understanding is required, but may be limited by their computational demands.

Conclusion

The Mixture of Experts (MoE) and its sparse variant, Sparse MoE, represent a significant advancement in neural network architecture, allowing models to scale to large sizes without a proportional increase in computational cost. The Mistral 8x7B model exemplifies the application of Sparse MoE, offering a powerful yet efficient solution for large-scale language tasks, making it a notable model in the landscape of generative AI.